What You Need to Know About Nvidia's AI Announcements at CES 2025 - Decrypt

01/07/2025 22:53

Nvidia CEO Jensen Huang unveiled the company's most ambitious consumer tech lineup yet, including personal AI computers, agent frameworks, media tools, and the new GeForce RTX 5000 Series GPUs.

After a record-breaking 2024, Nvidia is kicking off 2025 with a bang, unveiling a slate of products that could solidify its dominance in the fields of AI development and gaming.

CEO Jensen Huang took the stage at CES in Las Vegas to showcase new hardware and software offerings that span everything from personal AI supercomputers to next-generation gaming cards.

Nvidia's biggest announcement: Project DIGITS, a $3,000 personal AI supercomputer that packs a petaflop of computing power into a desktop-sized box.

Built around the new—and up until now, secret—GB10 Grace Blackwell Superchip, this machine can handle AI models with up to 200 billion parameters while drawing power from a standard outlet.

For heavier workloads, users can link two units to tackle models up to 405 billion parameters.

For context, Llama 3.1 405B, Meta's largest and most advanced open-source LLM, has 405 billion parameters and cannot run on consumer hardware.

Until now, running it required around eight Nvidia A100 or H100 GPUs, each costing around $30,000, for a total of roughly $240,000 in processing hardware alone.

Two linked units of Nvidia’s new consumer-grade AI supercomputer would cost $6,000 and could run a quantized version of the same model.
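The parameter and cost figures above come down to simple arithmetic. The sketch below is a rough estimate under stated assumptions, not Nvidia's sizing method: it counts only the memory needed to store the weights (ignoring the KV cache, activations, and runtime overhead) and treats "quantized" as a 4-bit format. The helper name `weight_memory_gb` is hypothetical.

```python
# Rough sketch: memory needed just to store a model's weights.
# Assumptions (not from Nvidia): weights only, no KV cache, activations,
# or runtime overhead; 4-bit stands in for the quantized format.

DIGITS_MEMORY_GB = 128  # unified memory per DIGITS unit, per the announcement


def weight_memory_gb(params_billions: float, bits_per_weight: int) -> float:
    """Billions of parameters x bytes per parameter = gigabytes (decimal)."""
    return params_billions * bits_per_weight / 8


# (model size in billions of parameters, number of linked DIGITS units)
scenarios = [(200, 1), (405, 2)]

for params, units in scenarios:
    available = DIGITS_MEMORY_GB * units
    for bits in (16, 8, 4):
        need = weight_memory_gb(params, bits)
        verdict = "fits" if need <= available else "does not fit"
        print(f"{params}B @ {bits}-bit: {need:.1f} GB of {available} GB -> {verdict}")

# Hardware cost, using the article's round numbers.
datacenter_cost = 8 * 30_000  # ~8 A100/H100 GPUs at ~$30K each
digits_cost = 2 * 3_000       # two linked DIGITS units at $3K each
print(f"Data center GPUs: ${datacenter_cost:,}; two DIGITS units: ${digits_cost:,}")
```

Under these assumptions only the 4-bit versions fit: about 100GB for a 200-billion-parameter model on one 128GB unit, and about 202.5GB for a 405-billion-parameter model across two linked units.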

“AI will be mainstream in every application for every industry. With Project DIGITS, the Grace Blackwell Superchip comes to millions of developers,” Jensen Huang, CEO of Nvidia, said in an official blog post. “Placing an AI supercomputer on the desks of every data scientist, AI researcher, and student empowers them to engage and shape the age of AI.”

For those who love technical details, the GB10 chip represents a significant engineering achievement born from a collaboration with MediaTek.

The system-on-chip pairs a Blackwell GPU with a Grace CPU built from 20 power-efficient Arm cores, linked by Nvidia's NVLink-C2C chip-to-chip interconnect.

Each DIGITS unit sports 128GB of unified memory and up to 4TB of NVMe storage. Again, for context, the most powerful consumer GPUs on the market pack around 24GB of VRAM (the memory that holds a model while it runs), and Nvidia's H100 data center GPU starts at 80GB.

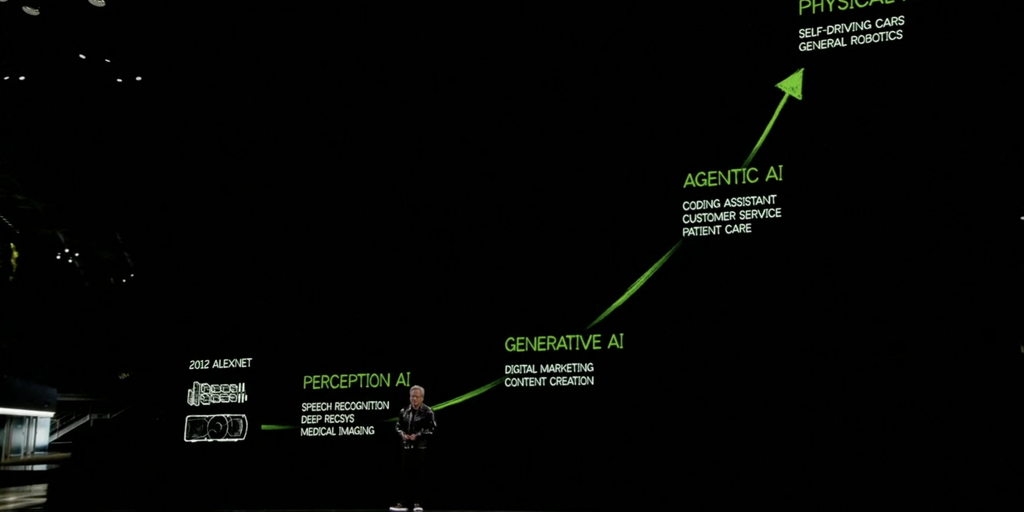

Nvidia's plans to dominate AI agents

Companies are rushing to deploy AI agents, and Nvidia knows it. That is probably why it developed Nemotron, a family of models that comes in three sizes. Today, the company announced an expansion with two new models: Nvidia NIM for video summarization and understanding, and Nvidia Cosmos, which gives Nemotron vision capabilities (the ability to understand visual instructions).

Until now, the Nemotron LLMs were text-only, though they excelled at instruction following, chat, function calling, coding, and math tasks.

They're available through both Hugging Face and Nvidia's website, with enterprise access through the company's AI Enterprise software platform.

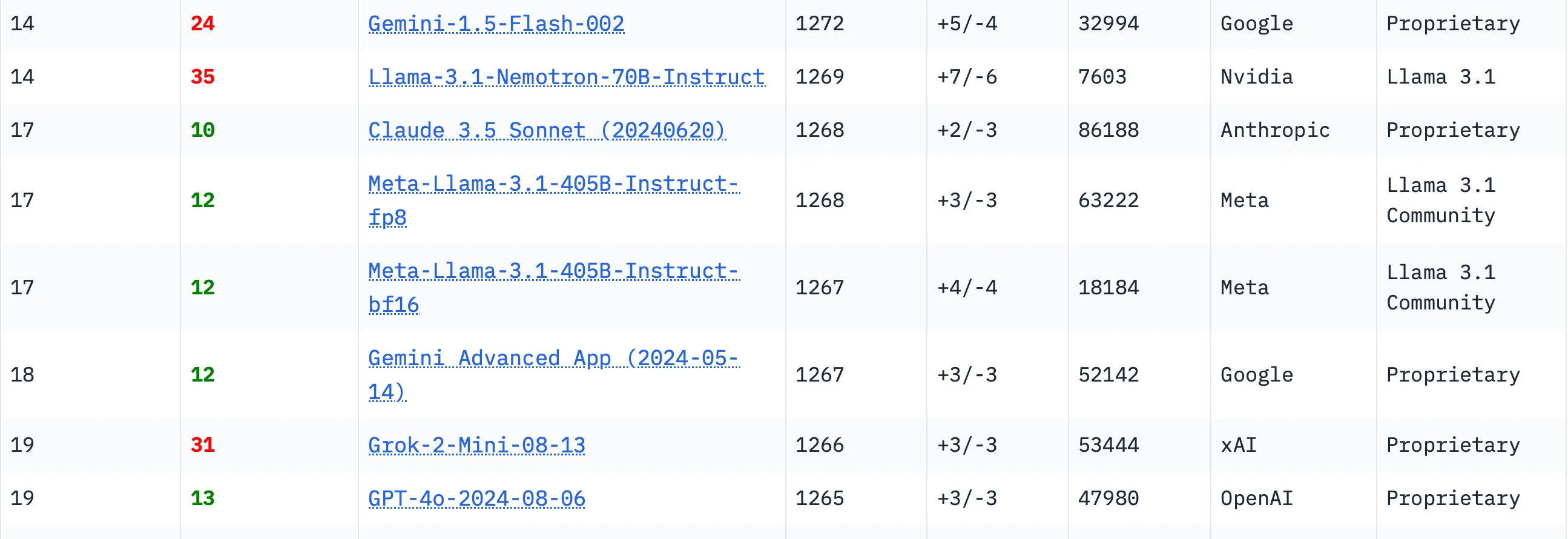

Again, for context, in the LLM Arena, Nvidia’s Llama Nemotron 70B ranks higher than the original Llama 405B developed by Meta. It also beats several versions of Claude, Gemini Advanced, Grok-2 mini, and GPT-4o.

Nvidia's agent push extends to infrastructure, too. The company announced partnerships with major agentic AI providers such as LangChain, LlamaIndex, and CrewAI to build blueprints on Nvidia AI Enterprise.

These ready-to-deploy templates target specific tasks, making it easier for developers to build highly specialized agents.

A new PDF-to-podcast blueprint aims to compete with Google's NotebookLM, while another blueprint helps build video search and summary agents. Developers can test these blueprints through the new Nvidia Launchables platform, which enables one-click prototyping and deployment.

Gamers, rejoice! The New GeForce RTX 5000 Cards Are a Performance Beast

Nvidia saved its gaming announcements for last, unveiling the much-expected GeForce RTX 5000 Series. The flagship RTX 5090 houses 92 billion transistors and delivers 3,352 trillion AI operations per second—double the performance of the current RTX 4090. The entire lineup features fifth-generation Tensor Cores and fourth-generation RT Cores.

The new cards introduce DLSS 4, which can boost frame rates by up to 8x by using AI to generate multiple frames for every rendered frame. “Blackwell, the engine of AI, has arrived for PC gamers, developers and creatives,” Jensen Huang said. “Fusing AI-driven neural rendering and ray tracing, Blackwell is the most significant computer graphics innovation since we introduced programmable shading 25 years ago.”
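The "up to 8x" figure can be unpacked with basic arithmetic. This is a hypothetical breakdown, not Nvidia's published methodology: it assumes up to three AI-generated frames per rendered frame, and that AI upscaling roughly doubles how fast the GPU renders frames. The 30 fps baseline and the function names are made up for illustration.

```python
# Hypothetical sketch of frame-generation arithmetic (not Nvidia's methodology).
# Assumptions: up to 3 AI-generated frames per rendered frame, and an
# illustrative 2x render speedup from AI upscaling.


def displayed_fps(rendered_fps: float, generated_per_rendered: int) -> float:
    """Each rendered frame is shown along with its AI-generated frames."""
    return rendered_fps * (1 + generated_per_rendered)


def total_speedup(native_fps: float, upscale_speedup: float,
                  generated_per_rendered: int) -> float:
    """Overall multiplier versus native rendering with no DLSS."""
    rendered = native_fps * upscale_speedup  # cheaper, lower-resolution renders
    return displayed_fps(rendered, generated_per_rendered) / native_fps


native = 30.0  # hypothetical native frame rate without DLSS
print(displayed_fps(native, 3))       # 120.0: frame generation alone (4x)
print(total_speedup(native, 2.0, 3))  # 8.0: plus the assumed 2x from upscaling
```

Under these assumptions, frame generation alone accounts for 4x, and the rest has to come from rendering fewer pixels per frame; actual gains will vary by game and resolution.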

The new cards also employ transformer models for super-resolution, promising highly realistic graphics and a lot more performance for their price—which is not cheap, btw: $549 for the RTX 5070, with the 5070 Ti at $749, the 5080 at $999, and the 5090 at $1,999.

If you don’t have that kind of money and want to game, don’t worry.

AMD also announced today its Radeon RX 9070 series. The cards are built on the new RDNA 4 architecture using a 4nm manufacturing process and feature dedicated AI accelerators to compete with Nvidia's Tensor Cores.

While full specifications remain under wraps, AMD's latest Ryzen AI chips already reach 50 TOPS (trillion operations per second) at peak performance.

Sadly for AMD, Nvidia is still the king of AI applications thanks to CUDA, its proprietary parallel computing platform and programming model.

To tackle this, AMD has secured partnerships with HP and Asus for system integration, and over 100 enterprise platform brands will use AMD Pro technology through 2025.

The Radeon cards are expected to hit the market in Q1 2025, setting up an interesting battle with Nvidia in both gaming and AI acceleration.

Edited by Sebastian Sinclair
